Environment:

ESXi Hosts: Dell PowerEdge R710 x2

Two quad-port NICs (Broadcom NetXtreme II 5709c with TOE and iSCSI offload) in each server

Storage: Dell PowerVault MD3200i

Connectivity:

For iSCSI, we are using the VMware software iSCSI initiator with jumbo frames enabled (9000-byte MTU).

iSCSI SAN best practices guide:

http://www.dell.com/downloads/global/products/pvaul/en/ip-san-best-practices-en.pdf

The guide recommends the following:

- Put each data path on a separate subnet.

- Establish multiple sessions to the storage system from each host.

- Set up one session per port from each of the network cards to each RAID controller module.

Establishing Sessions to a SAN in ESXi 4.1

VMware uses VMkernel ports as the session initiators, and each VMkernel port is bound to one physical adapter. Depending on the environment, this creates anywhere from a single session to an array up to 8 sessions (the ESX 4.1 maximum number of connections per volume). For a typical deployment, a one-to-one (1:1) ratio of VMkernel ports to physical network cards is sufficient.

Once these sessions to the SAN are established, the VMware Native Multipathing Plug-in (NMP) handles path selection. With the Round Robin policy (see Step 10), it spreads I/O across all available paths.
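The number of sessions per volume follows from the guide's rule of one session per NIC port to each RAID controller module. The minimal sketch below assumes the 2 VMkernel ports and 2 controllers of this lab. The function name `iscsi_session_count` is hypothetical, and the limit of 8 is the ESX 4.1 maximum quoted above.

```shell
#!/bin/sh
# Hypothetical helper: sessions per volume when every iSCSI VMkernel port
# opens one session to each RAID controller module.
MAX_SESSIONS=8  # ESX 4.1 maximum connections per volume

iscsi_session_count() {
    vmk_ports=$1      # VMkernel ports bound 1:1 to physical NICs
    controllers=$2    # RAID controller modules in the array
    sessions=$((vmk_ports * controllers))
    if [ "$sessions" -gt "$MAX_SESSIONS" ]; then
        echo "$sessions sessions exceeds the limit of $MAX_SESSIONS" >&2
        return 1
    fi
    echo "$sessions"
}

# This lab: 2 VMkernel ports x 2 RAID controller modules
iscsi_session_count 2 2
```

With two NICs and two controllers this gives 4 sessions per volume, which is well under the limit.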

Before proceeding, configure the iSCSI host ports on the storage array: IP addresses on the matching subnets and, because jumbo frames must be enabled end to end, an MTU of 9000.

Step 1: Configure the vSwitch and enable jumbo frame support

In ESXi 4.1 there is no option to enable jumbo frames on a vSwitch from the vSphere Client, so this step is done from the command line.

Create vSwitch:

esxcfg-vswitch -a vSwitch1

Enable jumbo frames on the vSwitch:

esxcfg-vswitch -m 9000 vSwitch1

Verify the configuration:

esxcfg-vswitch -l
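When you configure several hosts, it can help to generate these commands instead of typing them. The sketch below is a dry run only: it prints the Step 1 commands and executes nothing. The function name `step1_cmds` is hypothetical, and the 9000-byte ceiling is the standard vSwitch maximum MTU.

```shell
#!/bin/sh
# Dry run: print (do not execute) the Step 1 commands for one vSwitch.
step1_cmds() {
    vswitch=$1
    mtu=$2
    # Reject empty or non-numeric MTU values.
    case $mtu in
        ''|*[!0-9]*) echo "MTU must be numeric" >&2; return 1 ;;
    esac
    # A standard vSwitch accepts an MTU of at most 9000 bytes.
    if [ "$mtu" -gt 9000 ]; then
        echo "MTU $mtu is above 9000" >&2
        return 1
    fi
    printf '%s\n' \
        "esxcfg-vswitch -a $vswitch" \
        "esxcfg-vswitch -m $mtu $vswitch" \
        "esxcfg-vswitch -l"
}

step1_cmds vSwitch1 9000
```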

Step 2: Add iSCSI VMkernel ports with Jumbo Frames support (1:1 port binding)

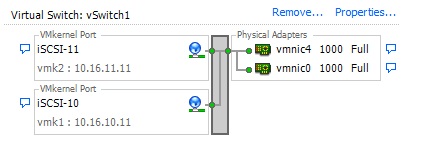

For each physical NIC designated for iSCSI connectivity, create a corresponding VMkernel port (a 1:1 ratio). In this lab setup, we have designated two ports (one from each NIC) for iSCSI connectivity.

Note: We could also create a separate vSwitch for each designated iSCSI port, but here we create two VMkernel ports and assign both to a single vSwitch, vSwitch1.

Note: VMware vSphere 4.1 supports up to 8 iSCSI connections to a single volume.

Create the port groups iSCSI-10 and iSCSI-11 for the iSCSI VMkernel ports:

esxcfg-vswitch -A iSCSI-10 vSwitch1

esxcfg-vswitch -A iSCSI-11 vSwitch1

Create the VMkernel ports on those port groups, with IP addresses and jumbo frame support:

esxcfg-vmknic -a -i 10.16.10.11 -n 255.255.255.0 -m 9000 iSCSI-10

esxcfg-vmknic -a -i 10.16.11.11 -n 255.255.255.0 -m 9000 iSCSI-11

Verify the configuration:

esxcfg-vmknic -l
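Step 2 repeats the same pair of commands once per port group. The dry-run sketch below prints them for any list of `portgroup:ip` pairs. The function name and the pair syntax are hypothetical, and the netmask and MTU are fixed to this lab's values.

```shell
#!/bin/sh
# Dry run: print the Step 2 commands for each "portgroup:ip" pair.
step2_cmds() {
    vswitch=$1
    shift
    for pair in "$@"; do
        pg=${pair%%:*}   # port group name, e.g. iSCSI-10
        ip=${pair#*:}    # VMkernel IP address, e.g. 10.16.10.11
        echo "esxcfg-vswitch -A $pg $vswitch"
        echo "esxcfg-vmknic -a -i $ip -n 255.255.255.0 -m 9000 $pg"
    done
    echo "esxcfg-vmknic -l"
}

step2_cmds vSwitch1 iSCSI-10:10.16.10.11 iSCSI-11:10.16.11.11
```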

Troubleshooting step: log in to the host console and use vmkping to ping every iSCSI host port on the array. This confirms that the network configuration works end to end.
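A plain ping does not prove that jumbo frames work, because a small packet fits in any MTU. A full-size test sends the largest ICMP payload that fits in one 9000-byte frame (9000 - 20 IPv4 header - 8 ICMP header = 8972 bytes) with fragmentation disabled. The sketch below assumes your vmkping build supports `-d` (don't fragment) and `-s` (size), so check `vmkping -h`. The array addresses are hypothetical placeholders.

```shell
#!/bin/sh
# Largest ICMP payload that fits in one frame without fragmentation:
# MTU minus the 20-byte IPv4 header and the 8-byte ICMP header.
jumbo_payload() {
    echo $(( $1 - 20 - 8 ))
}

# Dry run: print one vmkping per array iSCSI port. With -d set, the ping
# fails if any hop on the path has a smaller MTU.
ping_cmds() {
    size=$(jumbo_payload "$1")
    shift
    for target in "$@"; do
        echo "vmkping -d -s $size $target"
    done
}

# Hypothetical array port addresses; substitute your own.
ping_cmds 9000 10.16.10.100 10.16.11.100
```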

Step 3: Assign Physical Network Adapters to vSwitch

Link the physical NICs to vSwitch1 as uplinks:

esxcfg-vswitch -L vmnic0 vSwitch1

esxcfg-vswitch -L vmnic4 vSwitch1

Step 4: Associate VMkernel ports to physical adapters

This step creates the individual path binding from each VMkernel port to a NIC. It is required in order to use multipathing policies such as Most Recently Used (MRU) and Round Robin (RR), or the third-party MPIO plug-ins currently available from Dell.

By default, every VMkernel port group inherits all of the vSwitch's vmnics as active uplinks. Remove all but one vmnic from each port group so that each VMkernel port has exactly one uplink.

To remove a vmnic from a VMkernel port group:

esxcfg-vswitch -p iSCSI-10 -N vmnic4 vSwitch1

esxcfg-vswitch -p iSCSI-11 -N vmnic0 vSwitch1

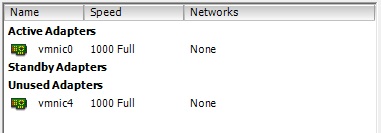

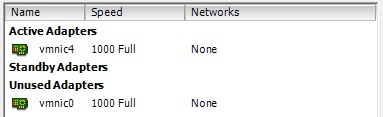

This can also be achieved by moving the extra nic adapter to “unused adapters” in the GUI configuration.

For iSCSI-10

For iSCSI-11

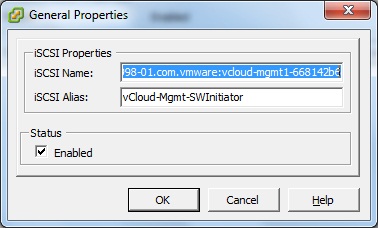

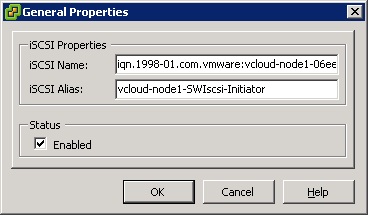
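The Step 4 rule ("remove all but one vmnic from each port group") generalizes to more than two NICs. The dry-run sketch below takes `portgroup:vmnic` pairs naming the uplink each port group should keep, and prints one `-N` removal for every other uplink. The function name is hypothetical, and it assumes the vSwitch's only uplinks are the vmnics listed in the pairs.

```shell
#!/bin/sh
# Dry run: for each "portgroup:vmnic" pair, print the commands that remove
# every other listed uplink from that port group, leaving one active vmnic.
step4_cmds() {
    vswitch=$1
    shift
    for pair in "$@"; do
        pg=${pair%%:*}     # port group, e.g. iSCSI-10
        keep=${pair#*:}    # the vmnic this port group keeps
        for other in "$@"; do
            nic=${other#*:}
            if [ "$nic" != "$keep" ]; then
                echo "esxcfg-vswitch -p $pg -N $nic $vswitch"
            fi
        done
    done
}

step4_cmds vSwitch1 iSCSI-10:vmnic0 iSCSI-11:vmnic4
```

For this lab it prints exactly the two removal commands shown in Step 4. With N port groups it prints N x (N - 1) removals.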

Step 5: Enable VMware iSCSI Software Initiator

Enable VMware software iSCSI initiator:

esxcfg-swiscsi -e

Verify that the software iSCSI initiator is enabled:

esxcfg-swiscsi -q

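Step 6: Bind VMkernel ports to the software iSCSI initiator

Bind each iSCSI VMkernel port to the software iSCSI adapter. In this lab, the VMkernel NICs are vmk1 and vmk2 and the adapter is vmhba41. Check the names on your own host with esxcfg-vmknic -l and esxcfg-scsidevs -a.

esxcli swiscsi nic add -n vmk1 -d vmhba41

esxcli swiscsi nic add -n vmk2 -d vmhba41

To verify:

esxcli swiscsi nic list -d vmhba41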
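The binding is one `nic add` per VMkernel port, followed by a listing. The dry-run sketch below prints those commands for any adapter and set of VMkernel NICs. The function name is hypothetical, and vmhba41, vmk1 and vmk2 are this lab's names.

```shell
#!/bin/sh
# Dry run: bind each listed VMkernel NIC to the software iSCSI adapter
# (ESX/ESXi 4.1 esxcli syntax), then list the bindings.
step6_cmds() {
    hba=$1
    shift
    for vmk in "$@"; do
        echo "esxcli swiscsi nic add -n $vmk -d $hba"
    done
    echo "esxcli swiscsi nic list -d $hba"
}

step6_cmds vmhba41 vmk1 vmk2
```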

Step 7: Configure discovery IP addresses

In the software iSCSI adapter's properties, add the IP address of an iSCSI host port on the array under Dynamic Discovery.

Rescan the storage adapters.

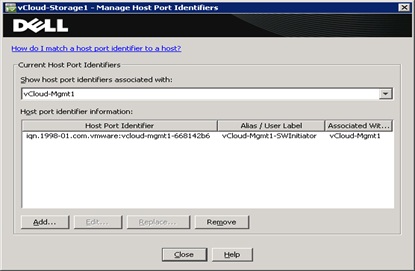

Step 8: Add ESXi host to the storage configuration and assign LUNs

After the rescan, an unassociated host port identifier (the host's iSCSI initiator name) appears on the storage array. Use it to define the new host on the storage and assign LUNs to it.

Step 9: Add Storage to vSphere configuration

Rescan the HBA again so that vSphere detects the new disks and the paths to them. Then add the storage to vSphere and create VMFS datastores.

Step 10: Configure VMware Path Selection Policy

Because the software iSCSI initiator is configured with one VMkernel port per NIC, we can use multipathing policies such as Most Recently Used (MRU) and Round Robin (RR). Round Robin spreads I/O across all active paths, which gives better bandwidth utilization than was possible in previous versions of ESX.

To verify the path selection policy of a datastore, select the host in the vSphere Client and go to Configuration > Storage. Highlight the datastore and click Properties, then click Manage Paths in the properties window.
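The policy can also be set per device from the ESXi 4.1 command line. The dry-run sketch below prints an `esxcli nmp device setpolicy` command for each device, switching it to Round Robin (VMW_PSP_RR). The device IDs are placeholders: take the real naa.* identifiers from `esxcli nmp device list`. The function name is hypothetical.

```shell
#!/bin/sh
# Dry run: print commands that switch each device to Round Robin
# and then show the resulting NMP configuration (ESX/ESXi 4.1 syntax).
rr_cmds() {
    for dev in "$@"; do
        echo "esxcli nmp device setpolicy --device $dev --psp VMW_PSP_RR"
    done
    echo "esxcli nmp device list"
}

# Placeholder device IDs; substitute the naa.* IDs of your MD3200i LUNs.
rr_cmds naa.DEVICE_ID_1 naa.DEVICE_ID_2
```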

**********************************************************************************

iSCSI SAN configuration on vSphere 5.0

Configuring iSCSI on vSphere 5.0 is a lot easier. Jumbo frames and the binding of VMkernel ports to the software iSCSI adapter can both be configured from the vSphere Client, so there is no need to use the command line.

Step 1: Configure vSwitch with VMkernel ports and Enable jumbo frame support

- Create VMkernel ports and add them to vSwitch1.

- Add the vmnics to vSwitch1.

Enable jumbo frames (MTU 9000) on vSwitch1.

Enable jumbo frames on the VMkernel ports as well.
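The GUI is enough here, but vSphere 5.0 also exposes these settings through its reorganized esxcli namespaces. The dry-run sketch below prints the equivalent commands. The function name is hypothetical, and vSwitch1, vmk1 and vmk2 are this lab's names.

```shell
#!/bin/sh
# Dry run: vSphere 5.0 esxcli equivalents of the jumbo frame settings,
# first for the vSwitch, then for each VMkernel interface.
jumbo50_cmds() {
    vswitch=$1
    mtu=$2
    shift 2
    echo "esxcli network vswitch standard set --vswitch-name=$vswitch --mtu=$mtu"
    for vmk in "$@"; do
        echo "esxcli network ip interface set --interface-name=$vmk --mtu=$mtu"
    done
}

jumbo50_cmds vSwitch1 9000 vmk1 vmk2
```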

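Step 2: Associate VMkernel ports to physical adapters

As in ESXi 4.1, override the failover order of each VMkernel port group so that it has one active vmnic and the other vmnic is unused.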

Step 3: Enable the software iSCSI adapter and bind the VMkernel ports

In the software iSCSI adapter's properties, open the Network Configuration tab and click Add to bind VMkernel ports.

Add both VMkernel adapters (vmk1 and vmk2).
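In vSphere 5.0, the 4.1 `esxcli swiscsi nic` commands are replaced by the `esxcli iscsi` namespace. The dry-run sketch below prints the commands that enable the adapter and bind the ports. vmhba33 is a hypothetical adapter name: `esxcli iscsi adapter list` shows the real one. The function name is also hypothetical.

```shell
#!/bin/sh
# Dry run: enable the software iSCSI adapter and bind VMkernel NICs
# (vSphere 5.0 esxcli syntax), then list the port bindings.
bind50_cmds() {
    hba=$1
    shift
    echo "esxcli iscsi software set --enabled=true"
    for vmk in "$@"; do
        echo "esxcli iscsi networkportal add --adapter=$hba --nic=$vmk"
    done
    echo "esxcli iscsi networkportal list --adapter=$hba"
}

# vmhba33 is a placeholder adapter name.
bind50_cmds vmhba33 vmk1 vmk2
```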

Step 4: Set up dynamic discovery and verify the paths created under Static Discovery

Rescan the host bus adapters when prompted.
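Dynamic discovery is an iSCSI SendTargets address, which uses TCP port 3260 by default. The dry-run sketch below prints the vSphere 5.0 commands to add one or more array portals and rescan the adapter. The adapter name vmhba33, the portal address 10.16.10.100 and the function name are all hypothetical.

```shell
#!/bin/sh
# Dry run: add SendTargets (dynamic discovery) portals on the default iSCSI
# port 3260, then rescan the adapter (vSphere 5.0 esxcli syntax).
discovery50_cmds() {
    hba=$1
    shift
    for portal in "$@"; do
        echo "esxcli iscsi adapter discovery sendtarget add --adapter=$hba --address=$portal:3260"
    done
    echo "esxcli storage core adapter rescan --adapter=$hba"
}

# Placeholder adapter and array port address.
discovery50_cmds vmhba33 10.16.10.100
```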

Step 5: Add the host to the storage and configure LUNs

Create LUNs and assign them to the host group.

Step 6: Add datastores to vSphere

Rescan the storage HBAs once again.

Add the datastores to vSphere and format them with VMFS-5.

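Step 7: Configure path selection

As in ESXi 4.1, open Manage Paths for each datastore and choose the path selection policy, for example Round Robin.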
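In vSphere 5.0 the NMP commands moved under `esxcli storage nmp`. The dry-run sketch below prints the per-device Round Robin commands. The device IDs are placeholders to be replaced with the naa.* identifiers from `esxcli storage nmp device list`, and the function name is hypothetical.

```shell
#!/bin/sh
# Dry run: set Round Robin on each device, then show the NMP configuration
# (vSphere 5.0 esxcli syntax).
rr50_cmds() {
    for dev in "$@"; do
        echo "esxcli storage nmp device set --device=$dev --psp=VMW_PSP_RR"
    done
    echo "esxcli storage nmp device list"
}

# Placeholder device IDs.
rr50_cmds naa.DEVICE_ID_1 naa.DEVICE_ID_2
```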

References:

iSCSI SAN configuration for MD3200i from Dell

http://www.dell.com/downloads/global/products/pvaul/en/md-family-deployment-guide.pdf

iSCSI SAN configuration for vSphere 4.1 from VMware

http://www.vmware.com/pdf/vsphere4/r41/vsp_41_iscsi_san_cfg.pdf

great post dude. Helped me out today Thx

Esxcfg-vswitch vs. Esxcfg-vmknic:

Esxcfg-vswitch –a –i 10.16.10.11 –n 255.255.255.0 –m 9000 iSCSI-10

Esxcfg-vswitch –a –i 10.16.11.11 –n 255.255.255.0 –m 9000 iSCSI-11

should it be:

Esxcfg-vmknic –a –i 10.16.10.11 –n 255.255.255.0 –m 9000 iSCSI-10

Esxcfg-vmknic –a –i 10.16.11.11 –n 255.255.255.0 –m 9000 iSCSI-11

Thanks man. I was struggling with my new SAN and the information about binding the VMkernel ports to Software iSCSI initiator helped me a lot.
